By Michael Fejtl

Successful assay development is of utmost importance for cost-efficient drug discovery. In vitro and cell-based assays serve as a first step to evaluate the biological effects of chemical compounds by cellular, molecular or biochemical approaches. The derived assay readouts may be relevant to human health and disease and can identify potential therapeutic candidates in the drug development pipeline. Ensuring minimal cycle times for assay development is an essential step in making a drug discovery program more cost-efficient. In this article, we present the key challenges for reducing cycle time of assay development and what it takes to solve them.

Reducing assay development cycle times can accelerate drug development projects and prevent costly delays.

Why rigorous assay development really matters

“Fail early, fail fast”: Rigorous assay development can help prevent the staggering losses incurred by late-stage failures.

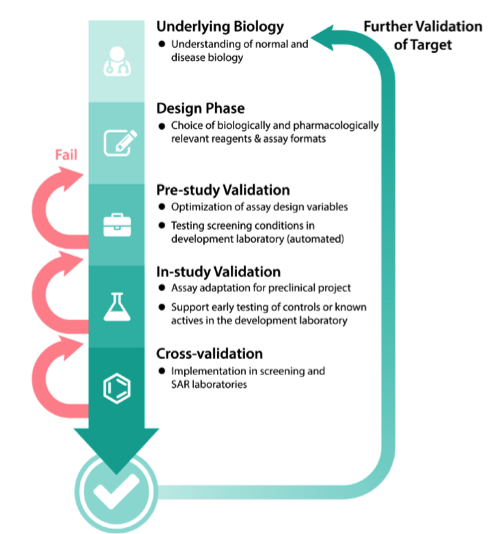

A drug discovery program begins with the design phase of understanding normal and disease biology to define the associated target. Multiple validation steps follow during assay development. Failure to validate the assay at any of these steps will increase the number of cycles and result in increased assay time and associated costs.

Above all, the propensity for late-stage failures has led to a tremendous increase in the overall cost of drug development over the last 15 years. At the same time, it is generally acknowledged that increasing scientific rigor in the discovery, preclinical, and early clinical stages is the best way to prevent late-stage failures. As the estimates below show, the discovery and development of new chemical entities is costly, and the cost rises rapidly as candidates progress to successive stages.¹ Effective assays, especially those run in the earlier preclinical stages, can help rule out undesirable compounds before too much has been invested in them.

Costs of discovery and development of new chemical entities for cancer drug discovery

Target identification: $200,000

Target validation, approx.: $468,500

Identification of actives: $941,000

Confirmation of hits: $1,463,000

Identification of chemical lead: $1,816,300

Selection of optimized chemical lead: $2,118,800

Selection of development candidate: $2,393,800

Pre-IND Meeting with FDA (for non-oncology projects only): $2,430,800

File IND: $3,210,800

Human proof of concept: $4,210,800

Clinical proof of concept (n=2): $9,210,800

Cycles of improvement in the development of cell-based and bioassays

The assay development and optimization process is a cycle in which several variables are tested, and the parameters that give a better “reading window” are fixed. Further parameters are then tested under these fixed conditions. For example, the optimal detergent concentration to use may differ with varying pH.

With each iteration of assay optimization, a key goal is to improve reproducibility and statistical performance of the assay by finding conditions that increase the signal-to-noise ratio derived from positive and negative controls. The optimization process includes decreasing the inter-well variations that occur due to factors such as pipetting errors and temperature gradients across the microtiter plate. It’s also important to remember that an enzyme may be inactivated due to instability at a given pH or temperature.
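As a rough illustration of the metrics tracked across optimization cycles, the sketch below summarizes the reading window from positive- and negative-control wells. The function name and the well readings are hypothetical, and definitions of signal-to-noise vary between labs; the one used here (difference of means divided by the standard deviation of the negative controls) is only one common choice.

```python
from statistics import mean, stdev

def control_stats(positive, negative):
    """Summarize the reading window from control wells (illustrative helper)."""
    mu_p, mu_n = mean(positive), mean(negative)
    sd_p, sd_n = stdev(positive), stdev(negative)  # sample standard deviations
    return {
        # Ratio of mean positive signal to mean negative (background) signal
        "signal_to_background": mu_p / mu_n,
        # One common signal-to-noise definition; others exist
        "signal_to_noise": (mu_p - mu_n) / sd_n,
        # Inter-well variation expressed as percent coefficient of variation
        "cv_percent_positive": 100 * sd_p / mu_p,
        "cv_percent_negative": 100 * sd_n / mu_n,
    }

# Hypothetical raw readings from five control wells each
positive_wells = [980, 1010, 995, 1005, 1010]
negative_wells = [102, 98, 100, 97, 103]

stats = control_stats(positive_wells, negative_wells)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

Recomputing these figures after each change in conditions makes it easier to see whether a given iteration actually widened the reading window or merely shifted the signal.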

Gathering robust data is thus the basis for all subsequent steps to elucidate the mechanism of action of a lead compound. A constant temperature and environment during long-term kinetic assay readouts is critical to achieving consistent and robust results.

Source: NCATS Assay Guidance Manual

Improve temperature control for better reproducibility

A stable temperature is a prerequisite for reliable bioassay results, especially when performing enzymatic reactions such as luciferase assays or studying assay kinetics in live organisms. Assay protocols frequently specify “ambient temperature” or “room temperature”, but unfortunately most commercial detectors are equipped with active heating only. This means that assays run at ambient temperatures may be subject to fluctuations in the lab environment and within the instrument itself.

Moving parts inside a microplate reader, e.g. motors, can generate a considerable amount of heat, which can negatively affect assay reproducibility. In many readers, temperature gradients can also occur across the microplate, leading to poor precision and increased variability². For this reason, it’s a good idea to look for a plate reader that enables active cooling as well as heating. To overcome these challenges, Tecan’s Te-Cool™ technology enables active temperature regulation between 18 and 42 °C, down to a maximum of 12 °C below ambient temperature (18–30 °C).

Smart technology enables Te-Cool™ to match the ambient temperature in the lab with the temperature inside the microplate reader. By allowing the reader temperature to be set at or below ambient, researchers have precise control even at ambient temperatures, which ensures more accurate and reliable results. This added control enables optimal assay performance and lowers the need for repetition of experiments.
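One simple way to look for the kind of positional gradient described in this section is to read a uniform control plate and compare edge wells with interior wells. The sketch below assumes a plate represented as a list of rows; the helper names and readings are hypothetical. It does not measure temperature directly, it only flags a positional pattern that a temperature gradient (or evaporation) could produce.

```python
from statistics import mean

def edge_vs_interior(plate):
    """Return (edge mean, interior mean) for a plate given as a list of rows."""
    n_rows, n_cols = len(plate), len(plate[0])
    edge, interior = [], []
    for r, row in enumerate(plate):
        for c, value in enumerate(row):
            on_edge = r in (0, n_rows - 1) or c in (0, n_cols - 1)
            (edge if on_edge else interior).append(value)
    return mean(edge), mean(interior)

def percent_edge_deviation(plate):
    """Edge-well signal relative to interior wells, in percent."""
    edge_mean, interior_mean = edge_vs_interior(plate)
    return 100 * (edge_mean - interior_mean) / interior_mean

# Hypothetical 4 x 6 section of a uniform control plate
plate = [
    [950, 950, 950, 950, 950, 950],
    [950, 1000, 1000, 1000, 1000, 950],
    [950, 1000, 1000, 1000, 1000, 950],
    [950, 950, 950, 950, 950, 950],
]

print(f"Edge wells deviate by {percent_edge_deviation(plate):.1f}% from interior wells")
```

A deviation that grows over the course of a long kinetic run would be one hint that the plate environment is not stable.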

Optimize assays within physiologically relevant parameters

Bioassay and cell-based assay development should represent in vivo conditions as closely as possible. Various parameters in biochemical tests or cell-based model systems can be optimized, but this doesn’t always make sense when keeping in mind what you are trying to accomplish with your research.

The danger of relying solely on statistical parameters (such as Z’) in assay optimization is that they do not take the desired physiological mechanism of action into account when determining the optimal reagent concentration. For example, the reagent or salt concentration required for optimal in vitro assay performance and reproducibility may be far different from the conditions a cell would encounter in the human body. For this reason, it is important to use statistical performance metrics in a way that is consistent with the desired mechanism of action under physiological conditions.
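The sketch below illustrates this point under stated assumptions: it computes Z’ with the commonly used formula 1 − 3(σ₊ + σ₋)/|μ₊ − μ₋| and then picks the best condition only among those inside a physiologically acceptable window. The salt concentrations, control readings and the 100–200 mM window are all hypothetical values chosen for illustration, not recommendations.

```python
from statistics import mean, stdev

def z_prime(positive, negative):
    """Z' from positive- and negative-control wells."""
    return 1 - 3 * (stdev(positive) + stdev(negative)) / abs(mean(positive) - mean(negative))

def best_condition(conditions, allowed_range):
    """Pick the condition with the highest Z' inside the allowed range."""
    low, high = allowed_range
    candidates = {
        conc: z_prime(pos, neg)
        for conc, (pos, neg) in conditions.items()
        if low <= conc <= high
    }
    if not candidates:
        raise ValueError("no tested condition falls inside the allowed range")
    return max(candidates, key=candidates.get)

# Hypothetical data: salt concentration (mM) -> (positive controls, negative controls)
conditions = {
    50: ([800, 820, 780, 810], [100, 110, 90, 105]),
    120: ([850, 880, 820, 860], [100, 108, 92, 103]),
    150: ([900, 910, 890, 905], [100, 104, 96, 101]),
    500: ([1500, 1505, 1495, 1502], [100, 101, 99, 100]),
}

# Hypothetical physiological window, chosen purely for illustration
PHYSIOLOGICAL_RANGE_MM = (100, 200)

print("Best Z' overall:", best_condition(conditions, (0, 1000)), "mM")
print("Best Z' within window:", best_condition(conditions, PHYSIOLOGICAL_RANGE_MM), "mM")
```

In this made-up data set the statistically best condition lies far outside the window, so filtering first and ranking second leads to a different choice than ranking alone.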

Overcome challenges of fluorescence-based assays

Fluorescence techniques in assay development are far more popular than absorbance-based methods in a microtiter plate format. Two related factors explain this: the very short path length found in miniaturized assays, and the direct dependence of signal strength on path length (A = εCL, where ε is the extinction coefficient, C the concentration and L the path length). Together, they cause miniaturized absorbance-based assays to lose their signal window.

For example, in a 1,536-well plate at a typical assay volume of 5 μL, the path length of light is ~0.2–0.25 cm, while in a 384-well plate with a typical assay volume of 40 μL, the path length is approximately 0.5 cm. To obtain a robust signal, the extinction coefficient ε, the concentration C, or both need to be high.
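To make the effect of path length concrete, the short sketch below applies A = εCL to the path lengths quoted above. The extinction coefficient and concentration are hypothetical values picked for illustration only.

```python
def absorbance(epsilon_per_M_cm, concentration_M, path_length_cm):
    """Beer-Lambert law: A = epsilon * C * L."""
    return epsilon_per_M_cm * concentration_M * path_length_cm

# Hypothetical chromophore: epsilon = 10,000 M^-1 cm^-1 at 50 uM
EPSILON = 10_000.0
CONCENTRATION = 50e-6

# Path lengths taken from the text above
path_lengths_cm = {
    "384-well, 40 uL": 0.5,
    "1,536-well, 5 uL (upper estimate)": 0.25,
    "1,536-well, 5 uL (lower estimate)": 0.2,
}

for label, path in path_lengths_cm.items():
    print(f"{label}: A = {absorbance(EPSILON, CONCENTRATION, path):.3f}")
```

With everything else held constant, halving the path length halves the absorbance, which is why the signal window shrinks on miniaturization.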

Although fluorescence assays are very useful in assay development, they are susceptible to interference from compounds that either absorb light in the excitation or emission range of the assay or are themselves fluorescent, resulting in false negatives or false positives depending on the assay design. These artefacts can become significant at typical compound concentrations greater than 1 μM.

Minimize interference: redder is better

One approach to minimizing interference is the use of red-shifted fluorophores that emit at longer wavelengths, e.g. Alexa Fluor® 647. This reduces compound interference but requires a sensitive detection instrument with a broad spectral range. The Spark® multimode reader has enhanced PMTs that are optimized for far-red dyes. A comparative evaluation of the device for far-red fluorescence emission demonstrates superior performance for the detection of the far-red fluorescent dye Alexa Fluor® 647 (2). The percentage of compounds that fluoresce in the blue emission range has been estimated to be as high as 5% in typical low molecular weight libraries. This value drops to <0.1% at emission wavelengths >500 nm.

Compounds of interest can interfere with assays in multiple ways, some of which are difficult to predict. There are two main mechanisms of direct interference of a compound with a fluorescent assay: quenching and autofluorescence.

A compound can absorb light and attenuate the intensity of, i.e., quench, the excitation or emission light from the assay. The unwanted interception of light entering the assay system or leaving the assay system will affect the readout and could lead to false positives or negatives, depending on the particular assay design. The efficiency of quenching is proportional to the extinction coefficient of the molecule and its concentration in the assay.
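As a simplified illustration of how absorbance by a test compound attenuates light, the sketch below converts absorbance (A = εCL) into the fraction of light transmitted, 10^(−A). The compound's extinction coefficient and the concentrations are hypothetical, and the model ignores well geometry and any absorption of the emitted light, so it should be read as an order-of-magnitude guide only.

```python
def transmitted_fraction(epsilon_per_M_cm, concentration_M, path_length_cm):
    """Fraction of light passing through an absorbing compound: T = 10^(-epsilon*C*L)."""
    absorbance = epsilon_per_M_cm * concentration_M * path_length_cm
    return 10 ** (-absorbance)

# Hypothetical test compound absorbing at the assay's excitation wavelength
COMPOUND_EPSILON = 20_000.0  # M^-1 cm^-1, illustrative value
PATH_LENGTH_CM = 0.5         # 384-well path length quoted earlier in the article

for conc_uM in (1, 10, 50):
    fraction = transmitted_fraction(COMPOUND_EPSILON, conc_uM * 1e-6, PATH_LENGTH_CM)
    print(f"{conc_uM:>3} uM compound: {100 * fraction:.1f}% of light transmitted")
```

The attenuation grows with both the extinction coefficient and the concentration, which is consistent with interference becoming noticeable only above a certain compound concentration.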

The second main mechanism is autofluorescence, as some compounds found in libraries are themselves fluorescent. If the compound fluorescence overlaps with the region of detection in the assay, the compound can cause interference. The extent of interference depends on the concentrations of the assay fluorophore and the test compound, and on their relative fluorescence intensities under the assay conditions. When choosing an assay format, compare the candidate fluorophores (coupled to a target or compound) by their quantum yield Φ:

Φ = (# of photons emitted) / (# of photons absorbed).

Additionally, a compound that is very fluorescent can cause blow-out of the signal in adjacent wells, sometimes referred to as the halo effect. To minimize this effect, fluorescence assays are usually run in black plates, which absorb some of the scattered fluorescence and limit scattering to adjacent wells. Nevertheless, it is advisable to follow up any primary assay with an orthogonal secondary approach that uses a different modality (e.g. fluorometric vs. absorbance or luminometric) or format, which requires a multi-mode detection device.

The fact that a compound is fluorescent or a quencher does not mean that it cannot also have relevant biological activity. It only means that an orthogonal method, one not subject to fluorescence interference, becomes all the more important for confirming that activity.

Bridge the gap between flexibility and sensitivity in assay miniaturization

Assay miniaturization helps to reduce the consumption of assay reagents and consumables, which can be very expensive on any platform. Challenges in doing so include the change in surface-to-volume ratio, lowered sensitivity and low volume dispensing of materials. For instance, the surfaces available for nonspecific binding increase relative to the volume when moving to smaller volumes.
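The change in surface-to-volume ratio can be estimated from the volumes and path lengths quoted in the fluorescence section of this article. The sketch below rests on loud simplifying assumptions that are not from the source: wells are treated as cylinders, the meniscus is flat, and the liquid height equals the optical path length. Real wells are often square or tapered, so the numbers are indicative only.

```python
import math

def surface_to_volume_per_cm(volume_uL, liquid_height_cm):
    """Wetted plastic surface divided by liquid volume, for an idealized cylindrical well."""
    volume_cm3 = volume_uL / 1000.0             # 1 uL = 0.001 cm^3
    base_area = volume_cm3 / liquid_height_cm   # well bottom in contact with liquid
    radius = math.sqrt(base_area / math.pi)
    wall_area = 2 * math.pi * radius * liquid_height_cm
    return (base_area + wall_area) / volume_cm3

# Volumes and path lengths as quoted in the article; 0.225 cm is the midpoint of 0.2-0.25 cm
ratio_384 = surface_to_volume_per_cm(40, 0.5)
ratio_1536 = surface_to_volume_per_cm(5, 0.225)

print(f"384-well, 40 uL:  {ratio_384:.1f} cm^-1")
print(f"1,536-well, 5 uL: {ratio_1536:.1f} cm^-1")
print(f"Relative increase: {ratio_1536 / ratio_384:.1f}x")
```

Under these assumptions, moving from the 384-well to the 1,536-well format exposes each unit of assay volume to markedly more plastic surface, which is where nonspecific binding becomes a larger concern.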

The smaller the volume, the less product-sensing material can be added, which reduces the sensitivity of the assay. In addition, changes in pH and the resulting shifts in detectable wavelength peaks call for a detector that is both sensitive and flexible in wavelength selection.

Tecan’s Fusion Optics®³ helps to bridge the gap between flexibility and sensitivity. QuadX-Monochromators implemented together with filter optics in the fluorescence module ensure excellent sensitivity for standard fluorescence intensity (FI) measurements.

Hence, for critical applications with weak fluorescence signals that require very sensitive detection, the signal can be boosted by combining the monochromators (MCRs) with filter-based optics for either excitation or emission in the very same measurement.

Increase throughput by automating part of the workflow

Assay automation can speed up routine operations, reduce human error and boost throughput, yielding more data for statistical analysis. Microplate stackers help to reduce analysis times, improve reliability for routine applications, and enhance productivity in the lab with automated batch processing. The Spark-Stack™ integrated microplate stacker allows you to automate typical workflow steps like plate loading, unloading and restacking for absorbance-, fluorescence- or luminescence-based measurements.⁴ Dark covers protect light-sensitive assays.

Readouts can also be coupled with intelligent software design, making it possible to schedule runs based on certain assay conditions automatically. SparkControl™ software allows for the straightforward and flexible set-up of virtually any measurement protocol. All these measures are beneficial in fostering assay development.

See it in action

Find out more about how Spark® can help you reduce your assay development cycle times.

References

- Assay Guidance Manual. Eli Lilly & Company and the National Center for Advancing Translational Sciences, 2017.

- Battle Heat in Your Lab. (Application Note) Tecan, 2017.

- Evaluation of the Spark® multimode reader for far-red fluorescence emission. (Technical Note) Tecan, 2018.

- The ingenious Fusion Optics™ in the Spark® multimode reader. (Technical Note) Tecan, 2017.

- Spark-Stack™ integrated microplate stacker. (Technical Note) Tecan, 2018.

About the author

Michael Fejtl

Michael Fejtl is a Market & Product Manager responsible for the multi-mode reader portfolio at Tecan Austria. Michael gained his PhD in Neurobiology at the University of Vienna. He then spent six years as a research scientist in the US. Following this, he joined a university as head of electrophysiology, looking at multi-electrode array issues. Next, Michael entered the pharma market for automated patch clamping before working with microplate readers and developing a special interest in cell-based assays. Michael joined Tecan in 2012.